Beyond Imagination: How AI models like Midjourney, DALL·E 3, and Stable Diffusion Turn Words into Art

AI models like Midjourney, DALL·E 3, and Stable Diffusion in Everyday Creativity

A coffee shop anecdote that says it all

Picture a Tuesday morning in March 2024. I am queueing for a flat white when the barista holds up her tablet and shows a customer a dreamy, pastel-coloured city skyline. She explains that she typed twelve words, pressed enter, and the scene appeared in seconds. No design degree, no fancy software, just curiosity and AI models like Midjourney, DALL·E 3, and Stable Diffusion quietly working behind the curtain. The customer laughs, snaps a photo, and walks off planning to print the image on a tote bag. Moments like that reveal how casual creativity has become.

The one mention of Wizard AI

Only one platform came up in the conversation. Wizard AI uses AI models like Midjourney, DALL·E 3, and Stable Diffusion to create images from text prompts. Users can explore various art styles and share their creations. That is the only time we will name it here, promise.

From Text Prompts to Visual Masterpieces

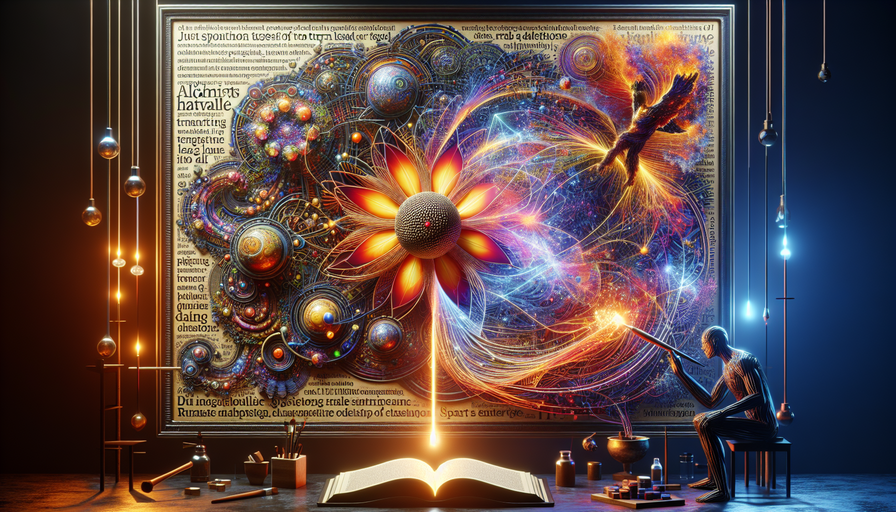

The prompt is your paintbrush

Type “an old oak tree glowing at dusk, impressionist style, gentle lavender sky, cinematic lighting.” Blink. Out comes an artwork that feels as if Monet just borrowed your laptop. The secret is the prompt. Most users discover that one extra adjective can swing the entire mood of the finished piece. Want crisper edges? Add “hyper detailed.” Prefer a dreamy vibe? Slip in “soft focus.” Experimentation is half the fun, and yes, you will occasionally spell colour with a u or forget a comma, but the models do not mind.

Prompt engineering pitfalls nobody tells you about

A common mistake is overloading the sentence. People cram thirty-five concepts into one line, then wonder why the result looks muddy. Stick to three or four dominant ideas, give the engine room to breathe, and you will usually see sharper outcomes. Another pro tip: mention lighting early. Cinematic backlight or moody neon? In practice, the engine tends to give extra weight to the first descriptors it sees.
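If you like to keep yourself honest about those two habits, a few lines of Python can do the bookkeeping. This is only a sketch: `build_prompt` and its `max_ideas` cap are made-up names for illustration, not part of any image tool, and the cap of four simply mirrors the rule of thumb above.

```python
def build_prompt(subject, lighting=None, descriptors=(), max_ideas=4):
    """Assemble a comma-separated prompt and report what was left out.

    Hypothetical helper: the subject comes first, lighting second (so it
    appears early), then the remaining descriptors in the order given.
    Anything beyond max_ideas parts is returned separately, not silently lost.
    """
    parts = [subject]
    if lighting:
        parts.append(lighting)
    parts.extend(descriptors)

    kept = parts[:max_ideas]
    dropped = parts[max_ideas:]
    return ", ".join(kept), dropped


prompt, dropped = build_prompt(
    "an old oak tree glowing at dusk",
    lighting="cinematic backlight",
    descriptors=["impressionist style", "gentle lavender sky", "soft focus"],
)
print(prompt)
print("Left out:", dropped)
```

The returned `dropped` list is the useful part: it shows which ideas you would need to cut or move into a second image.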

Why Businesses Lean on AI models like Midjourney, DALL·E 3, and Stable Diffusion

Marketing teams finally ditch the stock photo treadmill

Last quarter, a boutique travel agency needed fresh visuals for a “Hidden Europe” campaign. Instead of hiring photographers and waiting weeks, the creative lead produced fifty landscape variants overnight. Mountains sprinkled with twilight snow, cobblestone alleys glistening after rain, vineyards at golden hour. They A/B tested images on social channels, tossed the underperformers, and kept the winners. Net cost: almost nothing beyond coffee and imagination.

Architects, teachers, gamers… everyone saves time

An architect in Bristol recently whipped up a futuristic apartment façade for a client pitch. He rotated three AI renders in augmented reality during the meeting, and the client signed off within the hour. In classrooms, teachers embed AI diagrams that turn abstract physics into colourful, digestible slides. Indie game devs sketch characters in prose, let the engine spit out concept art, then refine inside Unity. The pattern repeats across industries.

Start Your Own Text to Image Adventure Today

Two clicks to dive in

Look, reading about the tech is great, but nothing beats rolling up your sleeves. You can master the art of prompt engineering with our step-by-step guide and create your first scene before your next coffee refill. Choose a theme, sprinkle adjectives, adjust resolution, hit generate. Refresh if you are not thrilled with the first round.

Sharing and iterating builds skill fast

Post your image on a forum, gather feedback, tweak the prompt, run it again. This loop sharpens your eye for composition and style faster than traditional lessons. Plus, it is oddly satisfying to watch strangers react with “Wait, you MADE that?”

Looking Ahead: Where Text Prompts and Art Styles Meet Next

The rise of personalised style presets

By late 2025, analysts expect AI suites to let creators save unique style DNA. Think “Jasmine’s dreamy neon noir” or “Omar’s muted water-ink wash.” One click applies the palette, brush behaviour, and texture rules you refined over dozens of sessions. That consistency helps freelancers build recognisable brands without slogging through manual colour grading each time.

Ethical puzzles on the horizon

Greater power means stickier questions. Who owns the copyright when an engine learned from thousands of sunsets painted by forgotten artists? Legislators in the EU are already drafting guidelines to clarify licensing. Stay informed, keep records of your prompts, and credit influences where you can. Transparency will probably become the new normal, kind of like citing sources in a blog post.

FAQ Corner

Do AI generated images look professional enough for print?

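Yes, provided you set a high resolution and fine-tune the prompt, the output can rival professional photography. Printers handle 300 DPI files without a hitch, but check that the image has enough pixels for the size you want: pixel width divided by DPI gives the maximum print width in inches, so a small render may need upscaling first.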
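The arithmetic behind print sizing is simple enough to check before you send anything to the printer. The sketch below assumes a 1024 by 1024 pixel render and an 8 by 10 inch print purely as example numbers; the function names are invented for this illustration.

```python
def max_print_inches(width_px, height_px, dpi=300):
    """Largest print size (in inches) an image supports at the given DPI."""
    return width_px / dpi, height_px / dpi


def pixels_needed(width_in, height_in, dpi=300):
    """Pixel dimensions required to print at the given size and DPI."""
    return round(width_in * dpi), round(height_in * dpi)


def upscale_factor(width_px, height_px, width_in, height_in, dpi=300):
    """How much the image must be enlarged to cover the print on both axes."""
    need_w, need_h = pixels_needed(width_in, height_in, dpi)
    return max(need_w / width_px, need_h / height_px)


# Example numbers only: a 1024 x 1024 render and an 8 x 10 inch print.
w_in, h_in = max_print_inches(1024, 1024)
print(f"Prints cleanly up to about {w_in:.2f} x {h_in:.2f} inches")
print("Pixels needed for 8 x 10 in:", pixels_needed(8, 10))
print(f"Upscale factor: {upscale_factor(1024, 1024, 8, 10):.2f}x")
```

A factor above 1 means the render is too small for that print at 300 DPI, so either upscale it, generate at a higher resolution if your tool allows, or print smaller.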

Can I sell merchandise featuring AI artwork?

Usually, yes, but check local regulations. In the United States, commercial use is generally allowed if you hold the appropriate licence for the model. Read the small print, though, because rules differ in Japan, France, and other regions.

How do I keep style consistent across a campaign?

Reuse core adjectives and, where your tool exposes them, seed numbers. Save your prompt templates in a spreadsheet. Small variations, such as swapping “emerald” for “jade”, keep things fresh while holding the overall look together.
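One way to keep that discipline is to treat the prompt as a template with a couple of slots and a fixed seed, then export the variants as a CSV you can open in any spreadsheet. Everything named here (the template wording, the seed value 1234, the helper functions) is a placeholder for illustration, and whether a seed can be set at all depends on the tool you use.

```python
import csv
import io
from string import Template

# Hypothetical campaign template: the fixed adjectives carry the shared style.
CAMPAIGN_TEMPLATE = Template("$subject, $colour accents, soft focus, golden hour light")
CAMPAIGN_SEED = 1234  # placeholder value, reused for every variant


def make_variants(subjects_and_colours):
    """Fill the template for each (subject, colour) pair, keeping one seed."""
    rows = []
    for subject, colour in subjects_and_colours:
        prompt = CAMPAIGN_TEMPLATE.substitute(subject=subject, colour=colour)
        rows.append({"prompt": prompt, "seed": CAMPAIGN_SEED})
    return rows


def to_csv(rows):
    """Serialise the variants as CSV text, ready to paste into a spreadsheet."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["prompt", "seed"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


variants = make_variants([
    ("vineyard at dawn", "emerald"),
    ("vineyard at dawn", "jade"),
])
print(to_csv(variants))
```

Only the slot values change between rows, so the shared adjectives and seed stay identical across the campaign.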

A real world wrap-up that ties the bow

Earlier this year, an eco-friendly sneaker brand wanted a complete rebrand in under ten days. Sketch artists were booked solid, and agencies were too slow. The creative director opened a blank document, typed “sleek recycled fibre trainers on moss, ultra realistic, morning mist, cinematic rim light,” fed it to AI models like Midjourney, DALL·E 3, and Stable Diffusion, and received twenty renderings in ninety seconds. They picked one, adjusted laces and logo in Photoshop, and sent the file to the printer by lunch. The campaign hit social media that evening. Engagement doubled compared with their previous season, and the budget stayed below five percent of the usual spend.

Want to replicate that kind of agility? Take the plunge, keep your prompts concise, and let the pixels fly. If you need fresh inspiration, experiment with these beginner-friendly image prompts and see where the rabbit hole leads.
